NXP has launched a new speech recognition engine within our Voice Intelligent Technology Portfolio. In this blog, we explore the challenges developers face in embedded voice control designs, introduce our new Speech to Intent Engine and show how you can use it in your applications.

Make Your Voice Heard: The Challenges with Voice Commands in Embedded Systems

Devices with embedded voice control have been around for many years, culminating recently in the emergence of game-changing smart speakers from the likes of Amazon, Google and Apple, among others. These smart speakers revealed to end users, for the first time, the simplicity, utility and intuitive promise of voice-first devices, which use voice as the primary or only user interface (UI). Thanks mainly to smart speakers, which leverage cloud-based natural language understanding, end users of voice-first devices now reasonably expect that a smart device can understand their natural language requests, queries and commands.

This expectation of natural language processing poses several issues for designers and end users alike: the need for a constant, reliable internet connection, the substantial power consumption of an always-listening, always-connected device, and the privacy concerns that come with such connected devices.

Recognizing the challenges faced by voice engines in embedded designs, NXP launched its latest offering in our Voice Intelligent Technology (VIT) Portfolio, the VIT Speech to Intent Engine. Learn more about VIT S2I.

Local Voice Control Versus Cloud-Based Voice Control

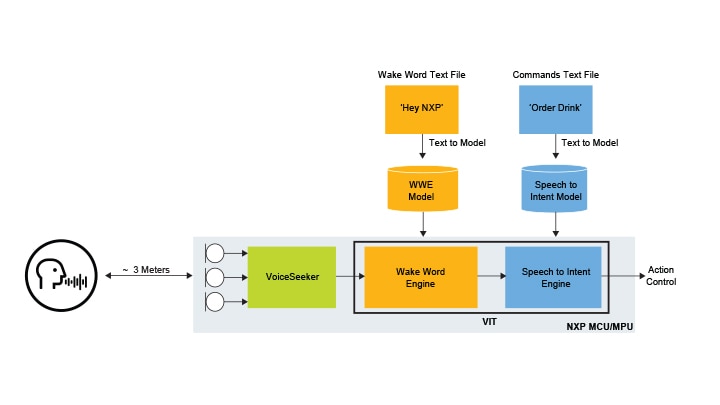

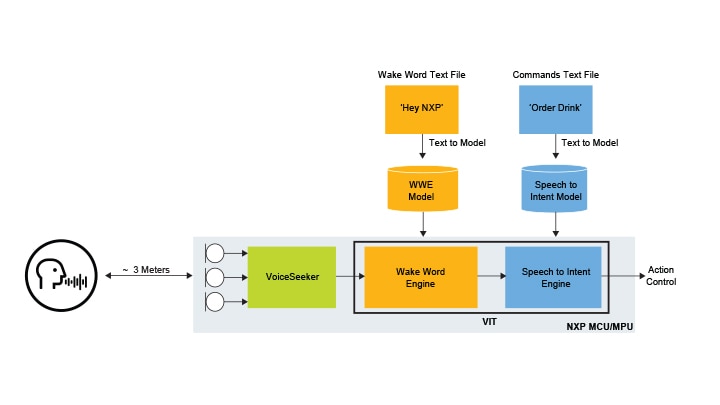

When it comes to implementing voice control on a device, engineers typically have three options available: local processing, cloud-based processing or some combination of the two, which we will call “hybrid processing”. With local voice control, a device processes all speech locally, at the edge, without the need to connect to the cloud or a remote server for secondary processing. Cloud-based processing is exactly what you’d expect: all voice audio goes to the cloud for processing, and responses are generated by the cloud and sent to the device over the internet. In the case of hybrid processing, a local wake word engine will generally be used to wake up the device (such as “Hey NXP”), and then all voice commands after this wake word are streamed to the cloud or a remote server for processing.

Local processing offers the advantages of low latency, lower power consumption and independence from networks. However, it is often limited to basic keywords and commands that require precise wording or phrasing. For example, turning on a light may require the exact phrase “Hey, NXP (wake word), lights on (voice command)” to be said with no variation.
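To make this limitation concrete, here is a minimal Python sketch of exact-phrase command matching. It is purely illustrative and is not the VIT Voice Command Engine API: the `COMMANDS` table and the `match_command` function are hypothetical names, and the input is assumed to be text that has already been transcribed from audio.

```python
# Hypothetical illustration of exact-phrase command matching.
# This is NOT NXP's VIT API; all names and phrases here are assumptions.
from typing import Optional

# Fixed phrases the device understands, mapped to command IDs.
COMMANDS = {
    "lights on": "LIGHTS_ON",
    "lights off": "LIGHTS_OFF",
}


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return " ".join(text.lower().split())


def match_command(utterance: str) -> Optional[str]:
    """Return a command ID only when the whole phrase matches a fixed entry."""
    return COMMANDS.get(normalize(utterance))


if __name__ == "__main__":
    print(match_command("Lights on"))                  # fixed phrase: recognized
    print(match_command("Please turn on the lights"))  # different wording: not recognized
```

Any phrasing outside the fixed table falls through, which is the behavior a natural language approach aims to avoid.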

With cloud-based and hybrid systems, the use of cloud services increases latency but makes it possible to run extremely complex algorithms, including natural language understanding models. Revisiting the prior example of turning on the lights, the user can phrase the request in many different ways, and the system will still understand the context of what is being asked, such as “It’s dark in here, please turn on the lights”.

As mentioned earlier, a major drawback of cloud-based natural language processing is its security and privacy implications. Simply put, voice audio streams are sent over the internet to a remote server by design, so it is possible for such systems to accidentally turn on and stream unintended audio to the cloud. These audio streams could include personal conversations, credentials or other sensitive information.

Introducing NXP’s Voice Intelligent Technology (VIT) Speech to Intent (S2I) Engine

Recognizing the challenges faced by voice engines in embedded designs, NXP launched its latest offering in our Voice Intelligent Technology (VIT) Portfolio, the VIT Speech to Intent (S2I) Engine. The S2I Engine is a premium offering of the VIT portfolio, which also includes a free Wake Word Engine (WWE) and Voice Command Engine (VCE).

Unlike systems that rely on remote cloud services, VIT S2I is able to locally determine the intent of naturally spoken language. This feature is possible thanks to NXP's latest developments with neural network algorithms and machine learning models designed to work on embedded systems. As such, intents like “Turn on the lights” can be said in numerous different ways, such as “Lights on”, “It’s too dark” and “Can you make it brighter”.
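The many-to-one mapping from phrasings to a single intent can be sketched in a few lines of Python. This is only a toy: VIT S2I uses neural network models, whereas the sketch below swaps in a simple cue-phrase table to show the idea. The `INTENT_CUES` table, the intent names and the `detect_intent` function are all hypothetical and are not part of any NXP API.

```python
# Toy illustration of mapping several phrasings to one intent.
# VIT S2I uses neural network models; this cue-phrase lookup is a
# stand-in assumption and NOT how the engine is implemented.
import re
from typing import Optional

# Hypothetical intents, each with cue phrases that signal them.
INTENT_CUES = {
    "LIGHTS_ON": ["turn on the lights", "lights on", "too dark", "brighter"],
    "LIGHTS_OFF": ["turn off the lights", "lights off", "too bright", "dimmer"],
}


def normalize(text: str) -> str:
    """Lowercase, drop everything except letters and spaces, collapse whitespace."""
    text = re.sub(r"[^a-z\s]", "", text.lower())
    return " ".join(text.split())


def detect_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose cue phrase appears in the utterance."""
    text = normalize(utterance)
    for intent, cues in INTENT_CUES.items():
        if any(cue in text for cue in cues):
            return intent
    return None


if __name__ == "__main__":
    for phrase in ["Turn on the lights", "Lights on", "It's too dark",
                   "Can you make it brighter"]:
        print(phrase, "->", detect_intent(phrase))
```

All four phrasings from the paragraph above resolve to the same hypothetical `LIGHTS_ON` intent, while an unrelated request returns no intent.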

This Speech to Intent capability makes interacting with embedded systems far more natural, while avoiding the added latency and power consumption of cloud-connected systems. Additionally, eliminating cloud services helps improve security and privacy, as all voice processing is handled locally on the device. Furthermore, when combined with an NXP Wake Word Engine, it’s possible to develop ultra-low power designs that wait for a specific wake word before engaging the VIT S2I engine for processing spoken commands.
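The wake word gating described above can be pictured as a small two-state machine. The Python sketch below is an assumption-laden illustration, not NXP code: `WakeWordGate` and `toy_intent_engine` are hypothetical names, utterances are modeled as text rather than audio, and the intent engine is a stub.

```python
# Hypothetical sketch of wake word gating: the intent engine only runs
# for the utterance that follows the wake word. Not an NXP API.
from typing import Callable, Optional


def toy_intent_engine(utterance: str) -> Optional[str]:
    """Stub standing in for a real intent engine (assumption for the demo)."""
    return "LIGHTS_ON" if "dark" in utterance.lower() else None


class WakeWordGate:
    def __init__(self, wake_word: str,
                 intent_engine: Callable[[str], Optional[str]]) -> None:
        self.wake_word = wake_word.lower()
        self.intent_engine = intent_engine
        self.awake = False       # False = low-power listening state
        self.engine_calls = 0    # how often the costlier engine ran

    def feed(self, utterance: str) -> Optional[str]:
        """Process one utterance and return an intent, if any."""
        if not self.awake:
            # Low-power state: only check for the wake word.
            if utterance.lower().strip() == self.wake_word:
                self.awake = True
            return None
        # Awake: hand exactly one utterance to the intent engine, then sleep.
        self.awake = False
        self.engine_calls += 1
        return self.intent_engine(utterance)


if __name__ == "__main__":
    gate = WakeWordGate("hey nxp", toy_intent_engine)
    for spoken in ["It's too dark", "Hey NXP", "It's too dark in here"]:
        print(repr(spoken), "->", gate.feed(spoken))
```

In this sketch the first utterance is ignored because no wake word preceded it, so the stub engine runs only once, which mirrors the power-saving intent of the design.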

VIT S2I is supported on several NXP devices, including Arm® Cortex®-M based i.MX RT Crossover MCUs and RW61x MCUs, and Cortex-A based i.MX 8M Mini, i.MX 8M Plus and i.MX 9x applications processors. VIT S2I currently supports English, with Mandarin and Korean to follow in late 2023. Online developer tools for creating custom commands and training models are scheduled to be released in 2024.

VIT Speech to Intent Block Diagram

How VIT Speech to Intent Can Give Voice to Your Next Design

As developments in the IoT segment continue, VIT S2I will be ideal for home automation and wearable electronics, along with other applications like automotive telematics and building controls. The ability to control basic device functions entirely hands-free via naturally spoken language will continue to grow in popularity with consumers, and processing speech at the edge without cloud services not only reduces system latency but also reduces privacy and security concerns.

VIT S2I systems will become more important in devices where the convenience of a voice-first user interface is desirable, including smart thermostats, smart appliances, home automation, lighting controls, shade control and more. Wearables and fitness devices can also use VIT S2I, with some use cases including setting up reminders, controlling Bluetooth devices and monitoring health.

Enhance Your Applications with NXP's VIT Portfolio

For those looking to start developing with NXP’s Voice Intelligent Technology Portfolio, get started today with our free VIT Wake Word and Voice Command Engines, available through the MCUXpresso SDK and online model tool. These engines are excellent for easily customized wake words and basic voice control, supporting rapid prototyping and quick development cycles where natural language understanding isn’t required.

If your application requires more natural language understanding functionality, contact your local NXP representative to get started with VIT Speech to Intent.

Learn more about NXP’s Voice Processing Portfolio and explore our VIT Speech to Intent Demos.