For many developers, especially those creating applications that run in data centers and on smartphones, software containers have become a familiar part of the development process.

Containers are used extensively in software pipelines, particularly those associated with cloud-native architectures, to make application code more portable. Containers are lightweight software packages that include everything needed to run in any environment, such as binary executables, libraries, utilities, data and configuration files. As a result, they decouple application code from the hardware, operating system and other infrastructure elements the app runs on.

Using a standardized, dedicated package to house the app means developers can focus on app functionality and performance without having to concern themselves with the underlying infrastructure. This makes it easier to use standardized tools, deploy resources more efficiently and reduce manual errors, ultimately saving time by giving developers more streamlined ways to update and release new iterations of software.

Too Big and Inefficient for Embedded

Containers are a go-to resource for developers working with 64-bit microprocessors and general-purpose Linux and Android operating systems. For developers working on embedded systems, however, where 32-bit microcontrollers and real-time operating systems (RTOSs) are the norm, containers have generally been too bulky and too inefficient to be a viable option.

That means the many benefits of containers, such as increased portability, greater scalability and faster time-to-market, have also been beyond the reach of many embedded systems.

But this is changing. Containers are beginning to break what some call the Linux/Android barrier, with new versions that are tailored for use with smaller CPUs running an RTOS.


Optimized for Embedded

Modifying containers to provide an infrastructure-agnostic, scalable execution environment on embedded systems requires an extensive redesign. The underlying structure has to change, adding support for real-time operation and the ability to run in constrained memory, all while maintaining the security, low power consumption and longevity that so many embedded applications need.

One company that has succeeded in bringing containers to embedded systems is MicroEJ (pronounced “micro-edge”), an IoT-focused software vendor with deep expertise in a broad range of embedded use cases that run at the edge, including smart home, wearables, medical devices, industrial processes, building automation and more.

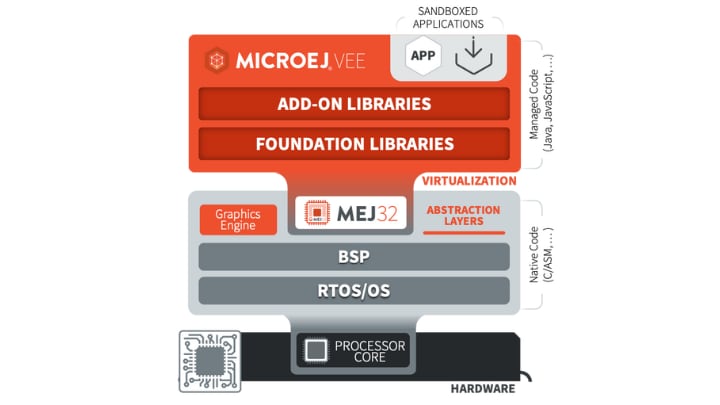

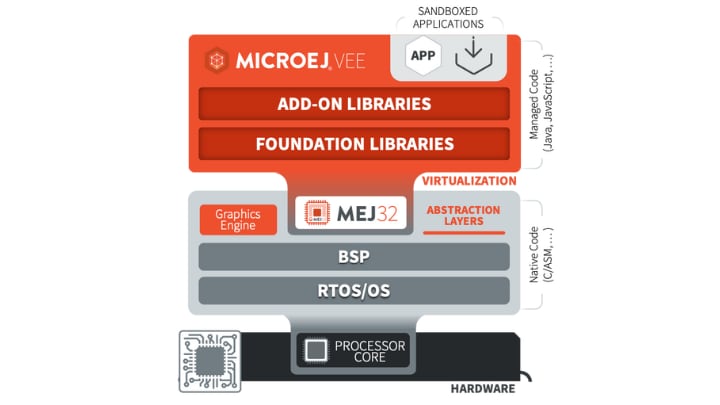

MicroEJ’s Virtual Execution Environment (VEE) is a container for embedded systems, optimized for 32-bit architectures running an RTOS with critical timing constraints. Occupying only 30 to 40 kilobytes of memory, MicroEJ VEE brings the features and capabilities of large containers to the embedded environment.

MicroEJ’s Virtual Execution Environment (VEE), a container for embedded systems


NXP Platinum Partner

MicroEJ is one of NXP’s platinum partners, meaning the two companies collaborate closely to enrich the enablement and implementation of system-level solutions.

With the MicroEJ VEE software container running on NXP silicon, customers can more easily port their applications across the complete range of NXP’s 32-bit hardware, including the i.MX RT1050, i.MX RT500, i.MX RW600, i.MX6 and more.

Successful co-development of these NXP-tailored containers ensures the appropriate level of virtualization and results in high performance, low power consumption, enhanced security, scalability and binary portability across the NXP portfolio. NXP is enhancing the native value of MicroEJ by adding its own specialized foundational libraries.

The result is an approach to embedded design that enables reliable operation across a wide range of hardware and operating systems, while making development both more cost-effective and more focused on innovation.

Newfound FRDM for Embedded

Being able to use containers with 32-bit architectures brings new levels of freedom and flexibility to embedded design. Firmware is no longer tied to specific devices and uses a standardized package, making it easier to reuse code and collaborate within an ecosystem.

Using containers also makes it easier to add features that use machine learning or artificial intelligence, such as object detection, voice recognition and data filtering, while also adding the necessary protections to keep these new features private and secure.

Using containers to break monolithic applications into separate components also makes it easier to implement service components on hardware, an approach known as “servitization,” without jeopardizing intellectual property or compromising security.

Containers also support broader scalability, making it possible to maintain and manage a portfolio of complex devices that can evolve over time.

The NXP/MicroEJ combination can be used for platforming, extending offerings from Linux- and Android-based devices to those running an RTOS, or to bring “smartphone-like” capabilities into the embedded segment. Either way, the approach creates a continuum across the NXP edge computing portfolio, lowering development costs and speeding time-to-market.

Real-World Examples

A number of companies have already adopted the combined NXP/MicroEJ approach as a way to accelerate digitization and introduce servitization solutions.

- In one example, a consumer-electronics manufacturer streamlines its processes by using a consistent interface and connectivity component across its widely different product categories.
- A leader in the industrial sector uses a MicroEJ container to customize its products at the end of production and in the field, enabling hyper-segmentation to address niche markets.
- A customer in the energy sector is using a containerized app to create a service ecosystem around its flagship product and to monetize an app and services with utilities and end users.

From consumer electronics to industrial, the MicroEJ VEE can be used in a wide set of applications


Take the Next Step

By making containers available to embedded systems and integrating cloud-native principles and virtualization, NXP is delivering benefits that, until now, have been unavailable to those working on resource-constrained systems. To learn more about how NXP and MicroEJ are helping developers use containers to enhance embedded designs, visit the MicroEJ - NXP portal.