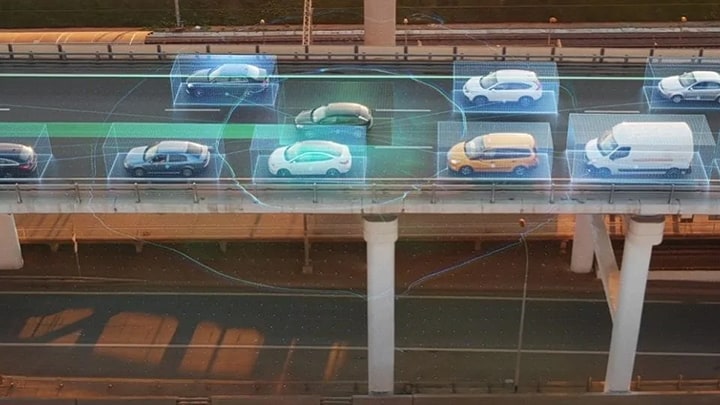

Vehicle autonomy and driver assistance systems rely on a balanced mix of technologies: RADAR (RAdio Detection And Ranging), LiDAR (LIght Detection And Ranging), cameras and V2X (vehicle-to-everything) communications. These technologies often have overlapping capabilities, but each has its own strengths and limitations.

RADAR has been used in automotive applications for decades and can determine the velocity, range and angle of objects. It is computationally lighter than other sensor technologies and works in almost all environmental conditions.

RADAR sensors can be classified by their operating range: Short Range Radar (SRR) covers 0.2 to 30 m, Medium Range Radar (MRR) covers 30 to 80 m, and Long Range Radar (LRR) covers 80 m to more than 200 m.
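As a rough illustration of these bands, the sketch below maps a target distance to the radar class whose nominal range covers it. The band limits come from the figures above. Treating 30 m and 80 m as the start of the next (longer-range) band, and leaving LRR open-ended, are assumptions made for this example; real sensor coverage overlaps and varies by product. The names `RadarClass`, `RANGE_BANDS` and `radar_class_for` are hypothetical.

```python
from enum import Enum
from typing import Optional


class RadarClass(Enum):
    SRR = "Short Range Radar"
    MRR = "Medium Range Radar"
    LRR = "Long Range Radar"


# Nominal bands from the text as half-open intervals [low, high) in meters.
# Assumption: a boundary value belongs to the longer-range class, and LRR
# has no upper limit because the text only says "more than 200m".
RANGE_BANDS = [
    (RadarClass.SRR, 0.2, 30.0),
    (RadarClass.MRR, 30.0, 80.0),
    (RadarClass.LRR, 80.0, float("inf")),
]


def radar_class_for(distance_m: float) -> Optional[RadarClass]:
    """Return the radar class whose nominal band covers distance_m, or None."""
    for radar_class, low, high in RANGE_BANDS:
        if low <= distance_m < high:
            return radar_class
    return None  # closer than the 0.2 m SRR minimum


for d in (0.1, 5.0, 30.0, 150.0):
    print(d, radar_class_for(d))
```

In practice a vehicle often carries several classes at once, so a target at 40 m may be seen by both an MRR and an LRR; the single-answer lookup here is only meant to make the band boundaries concrete.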

Long Range Radar (LRR) is the de facto sensor for Adaptive Cruise Control (ACC) and highway Automatic Emergency Braking Systems (AEBS). Currently deployed systems that use only LRR for ACC and AEBS have limitations and might not react correctly in certain situations, such as a car cutting in front of your vehicle, a thin-profile vehicle such as a motorcycle riding staggered within a lane, or a curve in the road causing the system to set its following distance based on the wrong vehicle. To overcome these limitations, a radar sensor can be paired with a camera sensor in the vehicle to provide additional context to the detection.

LiDAR sensors measure the distance to an object by timing how long a pulse of light takes to travel to the object and back to the sensor.
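Because the pulse covers the distance twice, the one-way range is half the round-trip path: d = c · t / 2, where c is the speed of light and t the measured round-trip time. The minimal sketch below applies that relation; the function names are hypothetical, and using the vacuum speed of light is a simplifying assumption (light in air is slower by roughly 0.03%).

```python
# Speed of light in vacuum, m/s (exact by SI definition). Assumption: the
# vacuum value is used for simplicity instead of the slightly lower value in air.
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to the target from a light pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half the
    total path: d = c * t / 2.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_s_for(distance_m: float) -> float:
    """Inverse relation: round-trip time for a target at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


# A target 200 m away returns its echo after roughly 1.33 microseconds.
print(f"{round_trip_s_for(200.0) * 1e6:.2f} us")
```

The microsecond-scale timings show why LiDAR receivers need very precise timing electronics: an error of just 1 ns in the measured round trip corresponds to about 15 cm of range error.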

Placed atop a vehicle, LiDAR can provide a 360° 3D view of the obstacles that a vehicle should avoid. Because of this capability, LiDAR has been the darling of autonomous driving since the 2007 DARPA Urban Challenge.

Since then, LiDAR sensors have seen large reductions in size and cost, but some of the more widely used and recognized models still cost far more than radar or camera sensors, and some even cost more than the vehicle they are mounted on.

Automotive LiDAR typically uses a 905 nm wavelength, which can provide up to 200 m of range within a restricted field of view. Some companies are now marketing 1550 nm LiDAR with longer range and higher accuracy.

LiDAR requires optical filters to reduce sensitivity to ambient light and to prevent spoofing from other LiDARs. The laser technology used must also be “eye-safe”.

More recently, the industry has been moving to replace mechanical scanning LiDAR, which physically rotates the laser and receiver assembly to collect data over an area spanning up to 360°, with Solid State LiDAR (SSL), which has no moving parts and is therefore more reliable over the long term in an automotive environment. SSLs currently have narrower field-of-view (FOV) coverage, but their lower cost makes it possible to use multiple sensors to cover a larger area.
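The trade-off between narrower FOV and sensor count is simple arithmetic: tiling a full 360° around the vehicle takes ceil(360 / (FOV − overlap)) identical sensors, where the overlap is the margin each sensor shares with its neighbor. The sketch below is illustrative only; the FOV and overlap values are hypothetical, not figures for any particular SSL product.

```python
import math


def sensors_for_full_coverage(sensor_fov_deg: float, overlap_deg: float = 0.0) -> int:
    """Minimum number of identical sensors needed to tile 360 degrees.

    Each sensor contributes (sensor_fov_deg - overlap_deg) degrees of new
    coverage, where overlap_deg is the margin shared with each neighbor.
    """
    effective_deg = sensor_fov_deg - overlap_deg
    if effective_deg <= 0:
        raise ValueError("overlap must be smaller than the sensor FOV")
    return math.ceil(360.0 / effective_deg)


# Hypothetical horizontal FOVs with a 10-degree overlap between neighbors.
for fov in (60.0, 120.0):
    print(fov, sensors_for_full_coverage(fov, overlap_deg=10.0))
```

Whether several cheaper solid-state units beat one mechanical unit then depends on their combined cost, mounting and processing needs, which this calculation deliberately leaves out.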

Cameras: Unlike LiDAR and RADAR, which emit their own signals, most automotive cameras are passive systems that rely on light already present in the scene.

A camera's capabilities depend heavily on its sensor technology and resolution. Like the human eye, cameras are susceptible to adverse weather conditions and variations in lighting. However, cameras are the only sensor technology that can capture texture, color and contrast information, and the high level of detail they capture makes them the leading technology for classification. These features, combined with ever-increasing pixel resolution and a low price point, make camera sensors indispensable and the volume leader for ADAS and autonomous systems.

Some examples of ADAS application-level evolutions enabled by cameras are:

- Adaptive Cruise Control (ACC): current systems consistently detect full-width vehicles such as cars and trucks; they need to be able to classify a motorcycle and keep a safe distance from it.

- Automatic High Beam Control (AHBC): current systems switch between high and low beams; they need to evolve to detect oncoming vehicles and shape the light beam accordingly.

- Traffic Sign Recognition (TSR): current systems recognize speed limits and a limited subset of other signs. Future systems need to understand supplemental signs and context (“Speed limit in effect 10am to 8pm”) and detect traffic signals in order to adapt ACC, stop, slow down, etc.

- Lane Keep Systems (LKS): current systems detect lane markings; future systems need to detect the drivable surface and adapt to construction signs and multiple lane markings.

Inside the cabin, driver monitoring and occupancy tracking for safety are being joined by gesture recognition and touchless controls, including the use of gaze tracking to add context to gesture recognition. For AV systems, driver monitoring also takes on the role of checking whether the driver is prepared to retake control if needed.

V2X: Extend what your car can “see.”

Beyond sensor-based radar, LiDAR and camera technologies, true autonomy will require the vehicle to communicate in a multi-agent, real-time environment. This is where vehicle-to-everything (V2X) communications come in. V2X allows you to “see” further than what’s nearby: around curves, around other vehicles, through the dense urban environment and even up to a mile away. With V2X communications, cars can “talk” to other cars, motorcycles, emergency vehicles, traffic lights, digital road signs and pedestrians, even if they are not within the car’s direct line of sight.

As two or more V2X-enabled devices come into range, they form an ad-hoc wireless network that allows them to automatically exchange short, dedicated, safety-critical messages with each other in real time.

All four technologies have their strengths. To guarantee safety on the road, we need redundancy in the sensor technologies being used. Camera systems provide the most application coverage as well as color and texture information, so camera sensor counts in vehicles are projected to see the largest volume growth, reaching close to 400 million units by 2030. LiDAR costs are coming down, and so is the cost of radar systems. Both technologies are also poised to see large percentage growth, with volumes reaching 40 to 50 million units by 2030.

As the leader in automotive semiconductors and Advanced Driver Assistance Systems (ADAS), NXP offers a broad portfolio of radar sensing and processing, vision processing, secure V2X and sensor fusion technologies that drive innovation in autonomous cars.

See more on our website.