Why do we talk about radar systems at all? Every year, about 1.3 million people die on the world’s roads, and millions more are severely injured. The adoption of advanced driver-assistance systems (ADAS) with radar technology is crucial to safer driving, avoiding accidents and saving lives.

Radar adoption is accelerating significantly, driven by regulatory mandates across regions and by regional New Car Assessment Program (NCAP) ratings. Many regions, for example, have issued legislation or five-star safety rating criteria that make certain features mandatory, such as automatic emergency braking, blind-spot detection or vulnerable road user detection.

Figure 1: Levels of ADAS and autonomous driving


The Society of Automotive Engineers (SAE) defines six levels of driving automation. L0 is no automation; step by step, ADAS evolves through driver assistance (L1), partial automation (L2), conditional automation (L3) and high automation (L4), up to fully autonomous L5 vehicles. These mandates and ratings are driving ADAS adoption and the move to higher levels of automation.
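As an illustration only, the six levels can be captured in a small lookup structure. The sketch below paraphrases the level names from SAE J3016; the `SAELevel` enum, `LEVEL_NAMES` table and `driver_must_supervise` helper are hypothetical names, and the rule they encode (the human driver supervises up to L2) is a simplification that ignores the takeover duties of an L3 fallback-ready user.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE J3016 levels of driving automation (hypothetical helper type)."""
    L0 = 0
    L1 = 1
    L2 = 2
    L3 = 3
    L4 = 4
    L5 = 5


# Level names paraphrased from SAE J3016.
LEVEL_NAMES = {
    SAELevel.L0: "No automation",
    SAELevel.L1: "Driver assistance",
    SAELevel.L2: "Partial automation",
    SAELevel.L3: "Conditional automation",
    SAELevel.L4: "High automation",
    SAELevel.L5: "Full automation",
}


def driver_must_supervise(level: SAELevel) -> bool:
    """Simplified rule: up to L2 the human driver monitors the road and is the backup."""
    return level <= SAELevel.L2


for level in SAELevel:
    role = "driver supervises" if driver_must_supervise(level) else "system drives while engaged"
    print(f"{level.name}: {LEVEL_NAMES[level]} ({role})")
```

Under this model an "L2+" vehicle is still `SAELevel.L2`: L2+ is an industry label rather than an SAE level, which is why the driver remains the backup.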

The L2 to L3 Leap

While automotive OEMs navigate the many design complexities required to achieve L3 conformance, where the vehicle OEM, not the driver, assumes accident liability, attention has turned to a transitional level: L2+. With regard to sensor technology, the leap from L2 to L3 is significant. L2+ provides L3-like capabilities but keeps the driver available as a backup, reducing the need for additional redundancy.

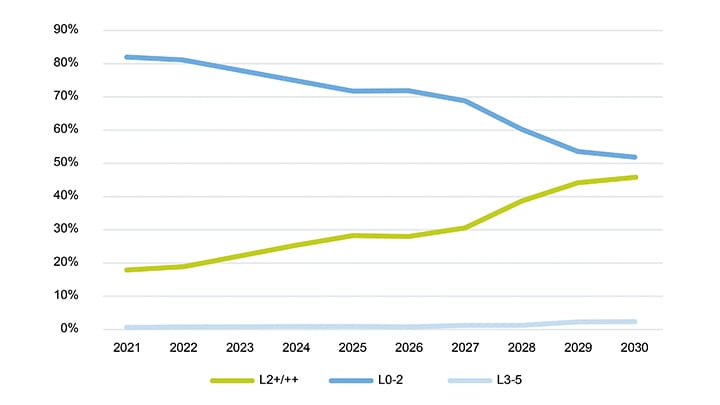

Figure 2: Forecast market shift from L2+ to autonomous driving (2021 – 2030)


A recent Yole Développement report indicates that the uptake of L2+ vehicles is likely to grow steadily as the share of L0 to L2 vehicles begins to subside, reaching almost 50% market share by 2030. L2+ also allows OEMs to roll out advanced safety and comfort features gradually, giving sensor technologies more time to mature. In the interim, the driver continues to provide redundancy, and OEMs can optimize the balance between features and cost and gradually introduce L3 ‘light’ vehicles.

Sensor Technology—No Single Solution

Three primary sensor technologies enable ADAS and autonomous driving: radar, camera, and light detection and ranging (LiDAR). Each has its own strengths and weaknesses, and ultimately no single sensor technology prevails.

Radar and camera sensors are largely complementary technologies and are widely deployed in L1 and L2 vehicles, owing to their maturity and affordability. Radars, for example, are excellent at measuring speed and distance, but cannot capture color information, and the angle-of-arrival resolution of conventional radars is notably lower than what camera and LiDAR sensors can achieve. Cameras, in contrast, are best for pattern and color detection, but can struggle with environmental effects: they can be blinded by bright light, see poorly at night, or struggle with fog or snow. Radar sensors, on the other hand, work robustly and reliably on bright sunny days, at night and in almost all weather conditions.
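Radar's strength in measuring distance and speed follows from two textbook relations: range from the round-trip delay of the echo, R = c·τ/2, and radial velocity from the Doppler shift, v = f_d·λ/2. The minimal sketch below assumes an ideal single reflector and a 77 GHz carrier; the function names are hypothetical, and production automotive radars typically extract both quantities from FMCW chirp processing rather than from a directly measured delay.

```python
C = 299_792_458.0  # speed of light in m/s


def range_from_delay(round_trip_s: float) -> float:
    """Target range from the echo's round-trip delay: R = c * tau / 2."""
    return C * round_trip_s / 2.0


def closing_speed_from_doppler(doppler_hz: float, carrier_hz: float) -> float:
    """Radial closing speed from the Doppler shift: v = f_d * lambda / 2.

    A positive Doppler shift (echo returns at a higher frequency) gives a
    positive value, meaning the target is approaching.
    """
    wavelength_m = C / carrier_hz
    return doppler_hz * wavelength_m / 2.0


# Illustrative inputs, not measured data.
print(f"{range_from_delay(1e-6):.1f} m")                    # 1 microsecond round trip
print(f"{closing_speed_from_doppler(10e3, 77e9):.1f} m/s")  # 10 kHz shift at 77 GHz
```

With these inputs, a 1 µs round trip corresponds to roughly 150 m, and a 10 kHz Doppler shift at 77 GHz to roughly 19.5 m/s (about 70 km/h).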

LiDAR’s primary differentiating features are its ultra-precise angular resolution, both horizontally and vertically, and its fine range resolution. These strengths make it well suited to high-resolution 3D environment mapping, allowing it to detect free space and boundaries accurately and to support precise localization. However, it shares some weaknesses with camera sensors, notably its susceptibility to harsh weather or road conditions. Yet the biggest hurdle to mass adoption in mainstream L2+ and L3 passenger vehicles is its cost. Here, 4D imaging radar, with its significantly finer resolution than conventional radar, is proving a compelling alternative to LiDAR.

Imaging Radar Evolution

In the early days, radar technology was mainly used to detect other cars. These were essentially 2D sensors that measured speed and distance. Today’s state-of-the-art radars, however, are 4D sensors: in addition to speed and range, they measure horizontal and vertical angles. This capability allows the vehicle to see cars and, more importantly, pedestrians, bicycles and smaller objects.
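A 4D detection can be thought of as a record holding range, radial velocity, azimuth and elevation. The sketch below assumes a hypothetical `RadarDetection4D` type and a sensor-centered frame (x forward, y left, z up); real point-cloud formats differ by vendor and often carry extra fields such as signal strength.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RadarDetection4D:
    """One point of a 4D radar point cloud (hypothetical layout, for illustration)."""
    range_m: float         # distance to the reflector in meters
    range_rate_mps: float  # radial velocity in m/s; negative = approaching (assumed convention)
    azimuth_rad: float     # horizontal angle; 0 = straight ahead, positive to the left
    elevation_rad: float   # vertical angle; 0 = sensor plane, positive upward

    def to_cartesian(self) -> Tuple[float, float, float]:
        """Convert the spherical measurement to x (forward), y (left), z (up) in meters."""
        horizontal_range = self.range_m * math.cos(self.elevation_rad)
        x = horizontal_range * math.cos(self.azimuth_rad)
        y = horizontal_range * math.sin(self.azimuth_rad)
        z = self.range_m * math.sin(self.elevation_rad)
        return x, y, z


# A hypothetical reflector 20 m away, 5 degrees to the left, 3 degrees above the sensor plane.
point = RadarDetection4D(20.0, -1.5, math.radians(5.0), math.radians(3.0))
x, y, z = point.to_cartesian()
print(f"x={x:.2f} m, y={y:.2f} m, z={z:.2f} m")
```

The elevation field is what lets a 4D sensor place a reflector above or below the sensor plane, information a sensor without vertical angle measurement cannot provide.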

Figure 3: Imaging radar can differentiate between cars, pedestrians and other objects.


At the bottom end (L2+), the focus is on a 360-degree cocoon around the vehicle (the industry buzzword for this is 'corner radar'). As the name suggests, there are at least four, but often six or seven, fine-resolution radar sensor nodes, as there might be additional ‘gap filler’ radars to the side. This makes it possible for an urban autopilot to see a child standing between two parked cars in low-light conditions. At the high end, L4 and above, vehicles can see smaller objects at higher resolution, using imaging radar for full environmental mapping around the vehicle and looking far ahead or behind to detect hazards and take appropriate action well in advance. Detection distances of 300 meters, or even beyond, should become possible in the future. A highway autopilot will then be able to detect and react to a motorcycle traveling alongside a truck that is approaching at high speed from behind.
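A back-of-the-envelope calculation shows why fine angular resolution matters at long range. At range R, one angular resolution cell Δθ spans roughly s = R·Δθ meters laterally (small-angle approximation), so two reflectors at similar range and speed that are closer together than s, such as a motorcycle beside a truck, tend to merge into one detection. The angular resolutions below are hypothetical values chosen for illustration, not specifications of any product.

```python
import math


def cross_range_cell_m(range_m: float, angular_resolution_deg: float) -> float:
    """Lateral width of one angular resolution cell, s = R * dtheta (small-angle approximation)."""
    return range_m * math.radians(angular_resolution_deg)


# Hypothetical angular resolutions, for illustration only.
for res_deg in (5.0, 1.0, 0.5):
    width = cross_range_cell_m(300.0, res_deg)
    print(f"{res_deg} deg at 300 m -> cell about {width:.1f} m wide")
```

At 300 m, even a 1 degree cell is about 5 m wide, wider than a typical highway lane, which is why long-range separation of adjacent road users calls for sub-degree angular resolution.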

The Future of Imaging Radar

Key technology ingredients that enabled this evolution in imaging radar were the migration from 24 to 77 GHz and from technologies such as gallium arsenide (GaAs) or silicon germanium (SiGe) to standard RF CMOS. Other advancements include the move from low to high channel-count advanced MIMO configurations, and from basic processing to high-performance processing with dedicated accelerators and DSP cores, together with advanced radar signal processing techniques.
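The benefits of moving to 77 GHz and to high channel counts can be sketched numerically. The example assumes typical allocations (about 250 MHz of sweep bandwidth in the 24 GHz ISM band versus up to 4 GHz in the 77 to 81 GHz band), FMCW range resolution ΔR = c/(2B), a MIMO virtual array of N_tx × N_rx channels, and a rough boresight angular resolution of 2/N radians for N virtual channels in a half-wavelength-spaced linear array. The 3 TX / 4 RX transceiver and the four-device cascade are hypothetical configurations, not a description of a specific NXP part.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wavelength_m(carrier_hz: float) -> float:
    """Free-space wavelength: lambda = c / f."""
    return C / carrier_hz


def range_resolution_m(bandwidth_hz: float) -> float:
    """FMCW range resolution: dR = c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)


def mimo_virtual_channels(n_tx: int, n_rx: int) -> int:
    """Each TX/RX pair forms one virtual channel in a MIMO radar."""
    return n_tx * n_rx


def approx_angular_resolution_rad(n_virtual: int) -> float:
    """Rough boresight resolution of a uniform linear array with lambda/2 spacing: 2 / N."""
    return 2.0 / n_virtual


# Band comparison under the assumed sweep bandwidths.
for label, carrier_hz, bandwidth_hz in (("24 GHz", 24.125e9, 250e6), ("77 GHz", 79e9, 4e9)):
    print(f"{label}: lambda = {wavelength_m(carrier_hz) * 1e3:.2f} mm, "
          f"range resolution = {range_resolution_m(bandwidth_hz) * 100:.1f} cm")

# Hypothetical channel counts: one 3TX/4RX transceiver versus four cascaded.
for n_tx, n_rx in ((3, 4), (12, 16)):
    n = mimo_virtual_channels(n_tx, n_rx)
    res_deg = math.degrees(approx_angular_resolution_rad(n))
    print(f"{n_tx} TX x {n_rx} RX -> {n} virtual channels, ~{res_deg:.1f} deg")
```

The shorter wavelength (about 3.8 mm at 79 GHz versus 12.4 mm at 24 GHz) also shrinks the half-wavelength antenna spacing, so a larger array fits in the same module footprint. In practice, virtual channels are often split between azimuth and elevation, so the single-row angular figure is an optimistic bound.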

With all of these technologies combined, NXP has developed the S32R45 radar processor, which, together with the TEF82xx RF CMOS radar transceivers, can deliver imaging-like point clouds with a moderate antenna count. This technology provides a clear, cost-effective pathway for OEMs to deliver 4D imaging radar capabilities for L2+ and higher at commercial volumes and cost structures. Alongside these are the essential peripherals, such as safe power management and in-vehicle network components. Together, they make up a complete radar node, and NXP is positioned to cover the complete system.

For more in-depth information about imaging radar, take the imaging radar session in the Driving Automation and Radar Academy.

Also take a look at our 4D Imaging Radar live demonstration to watch our technology at work.