- Smarter World Blog

NXP's Quest for Safe and Secure Automotive AI

The interaction between safety and security is a recurring topic in our discussions with NXP colleagues, in both formal and informal settings. The topic can become particularly messy when automotive applications and artificial intelligence are added to the mix.

In a blog entry from last year, “Future Challenges: Making Artificial Intelligence Safe,” we mentioned that at NXP we’re preparing the terrain by laying strong foundations, citing the NXP whitepaper on the morals of algorithms and Auto eIQ as examples. We are not alone in our quest for safe and secure automotive AI. In Germany, BSI and ZF, together with TÜV Nord, are working out how to test AI in cars. The German Research Center for Artificial Intelligence (DFKI) and TÜV SÜD are also looking at roadworthiness tests for AI systems. Although it is not yet clear what these tests will entail, we continue to build on our foundation.

How Future Vehicles Could Use ML/AI

On the inference side, we are convinced that future vehicles will use AI and machine learning to provide safety-related functions. That is why we’ve partnered with ANITI and ONERA to investigate the effects of random hardware faults on AI-based systems. This effort goes beyond the state of the art established by functional safety standards such as ISO 26262, which have explicitly excluded machine learning from their scope.

One focus area of this collaboration is fault injection, a technique used to (1) verify the effectiveness of safety mechanisms, (2) justify the robustness of a design against random hardware faults, and (3) verify in the field that a device detects faults as intended. Understanding how random hardware faults in edge devices affect machine learning models is key to establishing the trust needed to rely on these models in the real world. Without that trust, we will not be able to use machine learning and AI for the safety-related functions that autonomous vehicles need.
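To make the idea of fault injection more concrete, here is a toy sketch in Python. It is not NXP's or the ANITI/ONERA tooling, and every name in it (`flip_bit`, `predict`, `fault_campaign`) is hypothetical. It rests on two assumptions: a random hardware fault is modeled as a single bit flip in a float32 weight (a common single-event-upset model), and a two-class linear classifier stands in for a real neural network. The campaign flips each bit of each weight, one at a time, and records which faults change the model's prediction.

```python
import struct

# Assumption: a random hardware fault is modeled as one inverted bit in a float32 weight.
def flip_bit(value: float, bit: int) -> float:
    """Return `value` encoded as IEEE-754 float32 with one bit inverted (0 = mantissa LSB, 31 = sign)."""
    (raw,) = struct.unpack("<I", struct.pack("<f", value))
    (faulty,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return faulty

# Hypothetical toy model: one weight row per class, prediction = index of the highest score.
def predict(weights, x):
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in weights]
    return max(range(len(scores)), key=scores.__getitem__)

def fault_campaign(weights, x):
    """Exhaustively flip every bit of every weight, one fault at a time.

    Returns the fault-free ("golden") prediction and the list of (row, col, bit)
    faults that change it, i.e. the faults a safety mechanism would need to catch.
    """
    golden = predict(weights, x)
    critical = []
    for r, row in enumerate(weights):
        for c, w in enumerate(row):
            for bit in range(32):
                faulty = [list(rw) for rw in weights]  # fresh copy for each injected fault
                faulty[r][c] = flip_bit(w, bit)
                if predict(faulty, x) != golden:
                    critical.append((r, c, bit))
    return golden, critical

if __name__ == "__main__":
    golden, critical = fault_campaign([[0.5, -0.25], [0.25, 0.5]], [1.0, 1.0])
    print("golden prediction:", golden)
    print(f"{len(critical)} of 128 single-bit faults change the prediction")
```

With these example weights, flipping the sign bit or the most significant exponent bit of a weight can change the prediction, while flipping a low mantissa bit does not. Real campaigns inject faults into registers, memories, or the datapath of actual hardware (or an RTL or gate-level model) and typically rely on statistical sampling rather than exhaustive enumeration; the toy only illustrates the principle of separating critical faults from benign ones.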

How Can Safety Be Used to Establish Trust?

When it comes to establishing trust, we recognize that the safety of occupants in a vehicle that will, in the near future, run AI-based safety-related functions depends on more than flawless execution of the ML model. Here, the focus shifts from the hardware itself to the quality of the model.

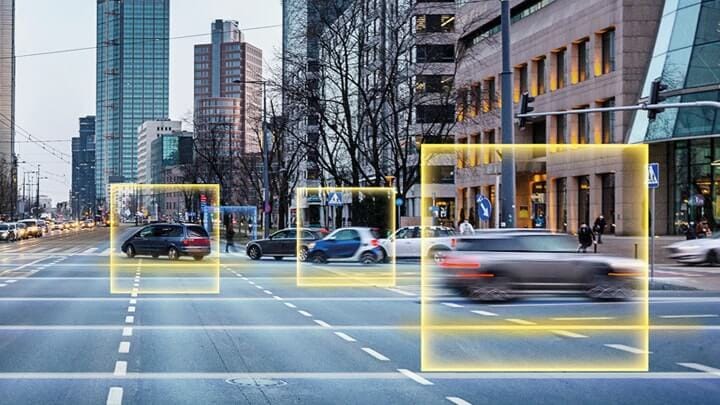

Models are commonly evaluated with a metric that reflects their average performance. For classification, the typical metric is accuracy: the ratio of correct classifications to all classifications. For object detection (which radar applications require), mean average precision (mAP) is usually the metric of choice. In addition to whether an object is classified correctly, mAP also reflects the quality of the predicted bounding box.
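The Python sketch below makes the two metrics concrete. Accuracy follows the definition above. A full mAP computation is too long to show here (it derives average precision per class from a ranked list of detections and then averages over classes), so the sketch only shows its key ingredient: intersection over union (IoU), which decides whether a correctly classified detection also has a good enough bounding box. The function names are hypothetical, and the 0.5 IoU threshold is an assumption borrowed from the PASCAL VOC convention; COCO-style mAP averages over several thresholds.

```python
from typing import Sequence, Tuple

# Axis-aligned bounding box: (x_min, y_min, x_max, y_max).
Box = Tuple[float, float, float, float]

def accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Ratio of correct classifications to all classifications."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes (1.0 = perfect overlap)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))  # overlap width
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))  # overlap height
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_true_positive(pred_label: str, pred_box: Box,
                     gt_label: str, gt_box: Box, threshold: float = 0.5) -> bool:
    """A detection counts toward AP only if the class is right AND the box overlaps enough.

    Assumption: 0.5 is the PASCAL VOC threshold; other benchmarks use other values.
    """
    return pred_label == gt_label and iou(pred_box, gt_box) >= threshold
```

For example, `accuracy(["car", "car"], ["car", "truck"])` is 0.5, and two 2 × 2 boxes offset diagonally by one unit have an IoU of 1/7, so such a detection would not count as a true positive even with the correct label. Both metrics collapse many predictions into a single number, which is why, on their own, they cannot tell one kind of mistake from another.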

However, we believe these metrics are not sufficient to establish trust in a model. They do not distinguish strange mistakes from understandable, human-like errors, such as misclassifying an object simply because it has a rare shape.

Furthermore, fixing a strange mistake requires knowing what caused it. And even when a prediction is correct, it may still rest on an unwanted bias, which we want to prevent.

How to Understand AI Algorithms

To deal with these issues, we need methods that open the black box that AI algorithms typically are and make their predictions understandable to humans. An interesting example of such a method for neural networks is Grad-CAM (Gradient-weighted Class Activation Mapping). In a paper published in 2019, Ramprasaath R. Selvaraju et al. explain how weighting a layer's feature maps by the gradients of the prediction with respect to those maps reveals which parts of an input are most important for the model's prediction. This gives users a valuable tool for understanding what a model has learned in order to make its predictions.
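The core Grad-CAM computation can be sketched in a few lines of Python, following the formulation in the paper. Each feature map A^k of a chosen convolutional layer receives a weight alpha_k equal to the spatial average of the gradient of the class score y^c with respect to that map. The weighted maps are summed, and a ReLU keeps only the regions that push the score up. This is a minimal sketch under stated assumptions: the function name `grad_cam` is hypothetical, the activations and gradients are assumed to have already been obtained from a deep learning framework's automatic differentiation (that step is omitted), plain nested lists replace tensors, and the final normalization to [0, 1] is a common display convenience rather than part of the definition.

```python
from typing import List

Map = List[List[float]]  # one H x W feature map (or gradient map)

def grad_cam(feature_maps: List[Map], gradients: List[Map]) -> Map:
    """Grad-CAM heat map for one class c.

    feature_maps[k][i][j] holds the activation A^k_ij of a convolutional layer;
    gradients[k][i][j] holds dy^c / dA^k_ij (assumed precomputed by a framework).
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    heat = [[0.0] * w for _ in range(h)]
    for a_k, g_k in zip(feature_maps, gradients):
        # Channel weight alpha_k: global average of the gradient over all H*W positions.
        alpha_k = sum(sum(row) for row in g_k) / (h * w)
        # Accumulate the gradient-weighted feature map.
        for i in range(h):
            for j in range(w):
                heat[i][j] += alpha_k * a_k[i][j]
    # ReLU: keep only regions with a positive influence on the class score.
    heat = [[max(0.0, v) for v in row] for row in heat]
    # Optional: scale to [0, 1] so the map can be rendered as a heat-map overlay.
    peak = max(max(row) for row in heat)
    if peak > 0:
        heat = [[v / peak for v in row] for row in heat]
    return heat
```

In practice, the resulting map has the (low) spatial resolution of the chosen layer, so it is upsampled to the input size before being overlaid on the image. Choosing the last convolutional layer is the usual trade-off between high-level semantics and spatial detail.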

This builds trust in the model and can help developers expose shortcomings in the training set so that they can be resolved.

In an example provided by the authors, two different models are trained to classify images as doctors or nurses. Grad-CAM produces an overlay (similar to a heat map) that shows a human which regions of a picture the model used to reach its decision. The first (“biased”) model relies on facial characteristics to make its choice. This is undesirable: because the training set was biased (more pictures of female nurses and of male doctors), the model may predict “nurse” from facial features alone. After analysis and retraining, the second model bases its decisions on other elements, and Grad-CAM is used to verify that these are the desired ones, such as a stethoscope.

This example can easily be extrapolated to safety-related functions. In driver monitoring systems, for instance, Grad-CAM could help verify that a model detecting driver fatigue bases its decisions on genuine fatigue markers rather than on a bias in its training data.

NXP continues to invest in solutions for making AI safe and secure. By establishing trust in training data and ensuring flawless execution of ML models, even in the presence of random hardware faults, we are working toward a bright, safe future.

Authors

Andres Barrilado

Andres works as a Functional Safety Assessor in the central NXP team. Previously, he served as a Safety Architect for radar front-end devices and as an applications engineer for automotive sensors. He lives in Toulouse, and in his free time he enjoys travelling with his wife, running, and being exposed to unknown situations.

Wil Michiels

Wil Michiels is a security architect at NXP Semiconductors who focuses on security innovations to enhance the security and trust of machine learning. Topics of interest include model confidentiality, adversarial examples, privacy, and interpretability.